Morality, Stereotypes, and Scientists: the Anatomy of Science Denial

Though 87% of scientists believe that human activity causes climate change, only half of the general population shares this view, according to a well-known Pew Research Center report. On the issue of GMO food safety, there is a staggering 51-percentage-point gap between AAAS scientists and the public. The featured image in this post, which shows a banana being injected with blue liquid, likely illustrates some public misconceptions about GMOs. These numbers indicate that communication between scientists and the public has broken down, but why? Research from Fiske and Dupree suggests that the general public respects scientists but does not trust them. Todd Pittinsky also suggests that while scientists trust and understand the validity of the scientific method, the public does not. PLOS Blogs has covered public perceptions of scientific issues ranging from climate change to vaccines and GMOs. But there is some good news: according to Lewandowsky et al., people tend to reject science as a whole, not just particularly contentious topics. So instead of trying to tackle each individual topic, perhaps we only need to inspire trust in scientists and the scientific process, and the rest will fall into line from there.

A Bit About Morality

Before we can talk about engendering trust in science, we first need to address how people perceive others in general. Morality often acts as the guiding rubric by which people judge the actions and motivations of their peers. A useful model for morality is moral foundations theory, which proposes that values come from five core principles, called foundations. These five foundations can be grouped into two categories: individualizing foundations and binding foundations. Individualizing foundations center on protecting the individual rights of others. Binding foundations center on roles and duties to foster harmony and cohesion in society.

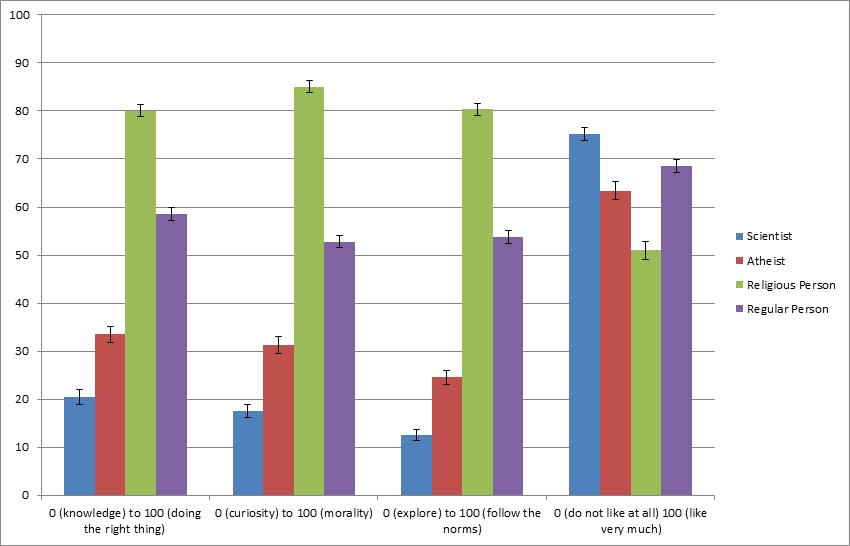

A recent study in PLOS ONE surveyed Amazon Mechanical Turk users to gain insight into the users’ perceptions of scientists. Surveyed individuals responded that they thought scientists were more likely than laypeople to act against the binding foundations, but not more likely to act against the individualizing foundations. As seen in Figure 1, Turk users also thought that scientists prioritize knowledge and curiosity over morality. The authors conclude that people view scientists as amoral (lacking morals), rather than immoral (willfully violating morals).

A Bit About Stereotypes

Individuals tend to group others based on their perceived morality, often employing stereotypes to describe individuals or groups of people believed to have different morals or values. According to Fiske et al., stereotypes are well described using two dimensions: warmth and competence. Warmth (or lack of it) refers to the perceived positive or negative intent of another person, while competence refers to the other person’s capacity to achieve their intent. Using this terminology, the ingroup, or the group that you belong to, is both warm and competent, and thus trustworthy. Stereotyped groups with high perceived competence and low perceived warmth, including stereotypically wealthy individuals, are often not trusted because their perceived intent is either unknown or negative. Similarly, scientists have unclear intent due to their perceived amorality, so they are not trusted.

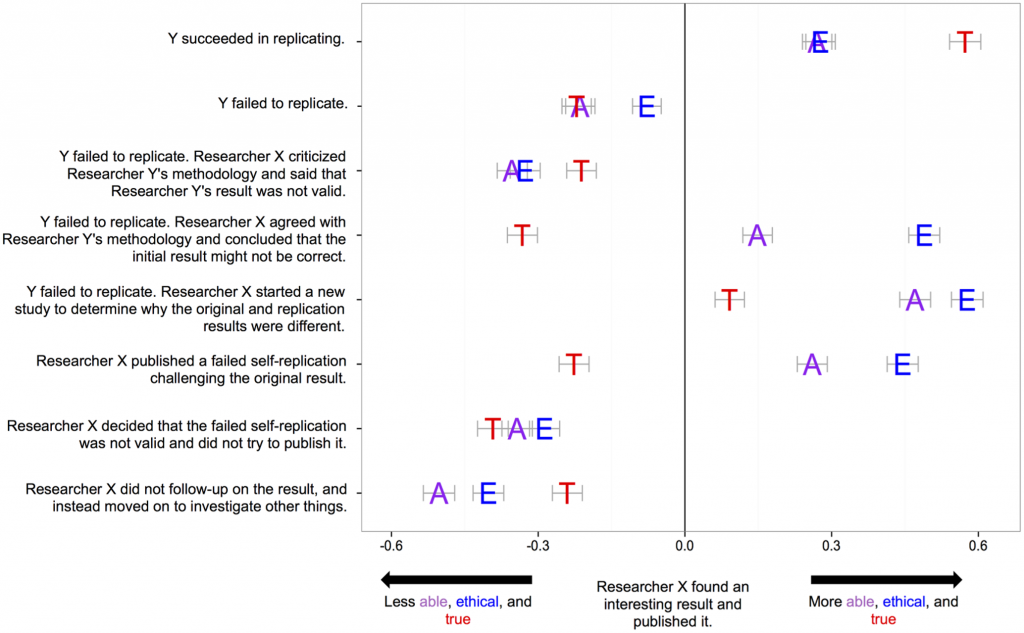

A second study in PLOS Biology examined how the public judges the competency of scientists. The authors presented hypothetical scenarios involving a study by researcher X and subsequent replication attempts to participants recruited through SoapBox Sample. One interesting conclusion is that the validity of a study’s results is not entirely correlated with beliefs about the researcher’s ability and ethics. What has a greater impact on perceptions of the ability and ethics of researcher X is how gracefully researcher X responds to replication attempts by researcher Y. In rows two and four of Figure 2, “truth” decreases because the replication failed, but “ability” and “ethics” increase because researcher X responds gracefully. In contrast, all three qualities decrease in rows three and seven because replication attempts failed and researcher X responded ungracefully. Clearly, the public believes that the way a scientist responds to replication is an important factor in determining a scientist’s competence.

Creating Trust

From these two studies, we can conclude that the public perceives scientists as competent, but not warm. These perceptions provide clear reasons why scientists are not trusted. I believe that in order to earn more trust from the public, scientists must come across as warmer to the public.

I propose two ways to achieve this goal. First, scientists need to make their intentions clear. Social psychologist Todd Pittinsky, mentioned in the introduction, has some terrific ideas on how to clarify intentions. One strategy is open access to data and methods, which is readily achieved through open access publishing. Scientists also need to treat misconduct by other scientists more seriously so that people don’t, for example, conclude that all vaccine science is fraudulent because of one case of misconduct. Finally, we need to treat science denial without disdain and acknowledge uncertainty properly when describing scientific results.

Second, scientists need to move into the ingroup sphere by imitating those already in the ingroup. Kahan et al. point out that an individual’s established ideology greatly influences how they process new information. I suggest that scientists frame their findings in a way that fits with the audience’s ideology, thus promoting “warmth”. For example, the Pew report reveals that only 37% of the public thinks that GMOs are safe to eat, which suggests that many people see GMOs as violating the individualizing foundations. Highlighting how certain crops can be genetically engineered for health (e.g. rice that is genetically engineered to produce beta carotene) shows how GMOs can be compatible with the individualizing foundations. Behaving like members of the ingroup can then move scientists into the ingroup sphere.

Battling misinformation is definitely an uphill climb, but it is a climb scientists must endeavor to make. Climate change denial and the anti-vaccination movement threaten the future of scientific progress, and while the danger cannot be ignored, we should not belittle non-scientific ideas. Scientists can build goodwill by increasing transparency and communicating the significance of their findings to the public. By taking other worldviews into account, we can find common ground, create open dialogue, and perhaps find solutions that benefit everyone.

Featured Image: Though scientists are generally in agreement about the safety of GMOs, the general public is skeptical. Photo courtesy of Flickr user antoinecouturier.

References and Further Reading

Ebersole CR, Axt JR, Nosek BA (2016) Scientists’ Reputations Are Based on Getting It Right, Not Being Right. PLOS Biol 14(5): e1002460. doi:10.1371/journal.pbio.1002460

Fiske ST, Cuddy AJ, Glick P, Xu J (2002) A model of (often mixed) stereotype content: Competence and warmth respectively follow from perceived status and competition. J. Pers. Soc. Psychol. 82(6): 878-902.

Fiske ST, Dupree C (2014) Gaining trust as well as respect in communicating to motivated audiences about science topics. Proc. Nat. Acad. Sci. 111: 13593-13597.

Graham J, Haidt J, Nosek BA (2009) Liberals and conservatives rely on different sets of moral foundations. J. Pers. Soc. Psychol. 96(5): 1029-1046.

Haidt J, Graham J (2007) When Morality Opposes Justice: Conservatives Have Moral Intuitions that Liberals may not Recognize. Soc. Justice. Res. 20(1): 98-116.

Kahan DM, Jenkins-Smith H, Braman D (2010) Cultural Cognition of Scientific Consensus. J Risk Res. 14: 147-174.

Lewandowsky S, Oberauer K, Gignac GE (2013) NASA Faked the Moon Landing—Therefore, (Climate) Science Is a Hoax: An Anatomy of the Motivated Rejection of Science. Psychol. Sci. 24(5): 622-633.

Pew Research Center, January 29, 2015, Public and Scientists’ Views on Science and Society.

Pittinsky TL (2015) America’s crisis of faith in science. Science 348: 511-512.

Rutjens BT, Heine SJ (2016) The Immoral Landscape? Scientists Are Associated with Violations of Morality. PLOS ONE 11(4): e0152798. doi:10.1371/journal.pone.0152798.

Tversky A, Kahneman D (1983) Extensional versus intuitive reasoning: The conjunction fallacy in probability judgment. Psychol. Rev. 90(4): 293-315.
